A Tidy Room is a Happy Room

In mid-December I attended a hackathon on Meta's campus in central London. It was something of a novel experience, as I'm much more used to the kind of events put on by university student bodies. I made some great new friends and enjoyed working with the Quest 3, but more importantly I put some cool wavy grass on the floor of a real room. This post is a technical breakdown of the graphical components that went into the effect.

To begin with, I'll be upfront and say that I did not write the original implementation of the grass; that credit goes to Acerola.

Thanks, Acerola!

Acerola's implementation generates chunks of grass positions using a compute shader. Those positions are then used to draw a large number of mesh instances with Graphics.DrawMeshInstancedIndirect().
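Graphics.DrawMeshInstancedIndirect() reads its draw parameters from a GPU buffer instead of taking them from the CPU. Unity documents that buffer as five integers: index count per instance, instance count, start index location, base vertex location and start instance location. The Python below only packs that layout so you can see its shape. The 45-index blade and the 64x64 chunk are made-up example values, not figures from Acerola's project.

```python
import struct


def indirect_args(index_count_per_instance: int, instance_count: int,
                  start_index: int = 0, base_vertex: int = 0,
                  start_instance: int = 0) -> bytes:
    """Pack the five 32-bit unsigned ints an indirect instanced draw reads."""
    return struct.pack("<5I", index_count_per_instance, instance_count,
                       start_index, base_vertex, start_instance)


# Hypothetical sizes: a blade mesh with 45 indices, one instance per position in a 64x64 chunk.
args = indirect_args(index_count_per_instance=45, instance_count=64 * 64)
print(len(args), struct.unpack("<5I", args))  # → 20 (45, 4096, 0, 0, 0)
```

The instance count lives in a buffer, so a single call can instance thousands of blades without the CPU touching each one.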

The grass mesh is drawn with a vertex shader that lets it move in the wind. A gradient is also calculated along each blade's length to give an impression of 3D lighting.
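Both effects come down to each vertex's height along the blade. The sketch below is a generic version of the idea, not Acerola's shader. It assumes a normalised height t, which is 0 at the root and 1 at the tip. The vertex is pushed sideways by a wind offset weighted by t², so the root stays planted. Its colour blends from a hypothetical base colour towards a tip colour by t.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical colours: darker at the root, lighter at the tip.
BASE_RGB: Vec3 = (0.10, 0.30, 0.05)
TIP_RGB: Vec3 = (0.60, 0.90, 0.30)


def lerp(a: float, b: float, t: float) -> float:
    # This form returns exactly a at t = 0 and exactly b at t = 1.
    return a * (1.0 - t) + b * t


def shade_blade_vertex(pos: Vec3, t: float, wind: Tuple[float, float],
                       base: Vec3 = BASE_RGB, tip: Vec3 = TIP_RGB) -> Tuple[Vec3, Vec3]:
    """Displace one blade vertex by the wind and pick its gradient colour.

    t is the normalised height along the blade; wind is an (x, z) offset
    that would come from the wind texture in a real shader.
    """
    weight = t * t  # the root never moves, the tip moves the most
    x, y, z = pos
    displaced = (x + wind[0] * weight, y, z + wind[1] * weight)
    colour = tuple(lerp(b, c, t) for b, c in zip(base, tip))
    return displaced, colour
```

Squaring t is one common choice. It makes a blade curve rather than tilt rigidly, but any weight that is zero at the root would keep blades anchored to the floor.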

Chunks outside the camera's field of view are culled, so rendering work is only spent on the chunks that are visible.
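Per-chunk culling is typically a test of an axis-aligned box against the frustum planes. Unity exposes one as GeometryUtility.TestPlanesAABB. The Python below reimplements the common "positive vertex" version purely for illustration. It assumes each plane is given as a normal plus an offset, with the inside on the positive side. The chunk boxes and the two-plane "frustum" are hypothetical.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
# A plane as (nx, ny, nz, d); a point p is on the inside when dot(n, p) + d >= 0.
Plane = Tuple[float, float, float, float]


def aabb_visible(planes: Sequence[Plane], box_min: Vec3, box_max: Vec3) -> bool:
    """Conservative frustum test: False only if the box is entirely outside some plane."""
    for nx, ny, nz, d in planes:
        # The corner furthest along the plane normal (the "positive vertex").
        px = box_max[0] if nx >= 0 else box_min[0]
        py = box_max[1] if ny >= 0 else box_min[1]
        pz = box_max[2] if nz >= 0 else box_min[2]
        if nx * px + ny * py + nz * pz + d < 0:
            return False  # even the best corner is outside, so the whole box is
    return True


# Toy "frustum": a near plane at z = 0 and a far plane at z = 20, both facing inwards.
planes = [(0.0, 0.0, 1.0, 0.0), (0.0, 0.0, -1.0, 20.0)]
print(aabb_visible(planes, (0.0, 0.0, 2.0), (1.0, 1.0, 3.0)))    # True
print(aabb_visible(planes, (0.0, 0.0, -3.0), (1.0, 1.0, -2.0)))  # False
print(aabb_visible(planes, (0.0, 0.0, 25.0), (1.0, 1.0, 26.0)))  # False
```

The test is conservative. A box near a frustum corner can pass while being just outside, and the only cost is one wasted draw.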

Our application has the user interacting with the grass, so we first needed to fit the grass to the physical room, regardless of its size or shape. For simplicity, I first reduced the grass' footprint to 10x10 m. That should be just bigger than most reasonable rooms, and it is significantly smaller than the terrain the grass originally covered. My approach was then to scale and translate the generated grass positions so that they all fell inside the limits of the room.

The Quest 3 provides access to the generated mesh of the room at runtime, from which we can get an axis-aligned bounding box with Mesh.bounds.

This gives the actual size of the room, and so this information needs to be passed into the compute shader responsible for generating grass positions.

By using the maximum and minimum limits on the X and Z axes, the required information can all be passed into the shader with a single Vector4.
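In Unity, Mesh.bounds already does this calculation. The Python below just spells out what ends up in that Vector4. The (left, right, back, front) component order is my assumption, chosen to match the _Left, _Right, _Back and _Front names the shader uses. Unity documents Mesh.bounds as being in the mesh's local space. Treating those bounds as room coordinates therefore assumes the room mesh's transform doesn't rotate or scale it.

```python
from typing import Iterable, Tuple


def room_bounds_xz(vertices: Iterable[Tuple[float, float, float]]) -> Tuple[float, float, float, float]:
    """Return (left, right, back, front), i.e. (min x, max x, min z, max z).

    The Y axis is ignored because the grass only needs the floor footprint.
    """
    xs, zs = [], []
    for x, _y, z in vertices:
        xs.append(x)
        zs.append(z)
    if not xs:
        raise ValueError("room mesh has no vertices")
    return min(xs), max(xs), min(zs), max(zs)


# Hypothetical 4 m x 6 m room, offset from the origin.
room = [(-2.0, 0.0, -1.0), (2.0, 0.0, -1.0), (2.0, 2.5, 5.0), (-2.0, 2.5, 5.0)]
print(room_bounds_xz(room))  # → (-2.0, 2.0, -1.0, 5.0)
```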

```hlsl
// GrassChunkPoint.compute

// Original implementation
pos.x = (id.x - (chunkDimension * 0.5f * _NumChunks)) + chunkDimension * _XOffset;
pos.z = (id.y - (chunkDimension * 0.5f * _NumChunks)) + chunkDimension * _YOffset;

// Scale to fit the aspect of the room
float width = _Right - _Left;
float length = _Front - _Back;
pos.x *= width / dimension;
pos.z *= length / dimension;

pos.xz *= (1.0f / scale);
```
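To sanity-check the scaling, here is the same mapping in Python with a worked room. It assumes the generated field is a square of side dimension, centred on the origin, and that scale is 1. The translation onto the room's centre is my own addition. The post describes translating as well as scaling, but that step isn't part of the shader snippet, so where it actually happens is an assumption.

```python
from typing import Tuple


def fit_to_room(x: float, z: float, dimension: float,
                left: float, right: float, back: float, front: float) -> Tuple[float, float]:
    """Map a grid position in [-dimension/2, dimension/2] on each axis onto the room's XZ bounds."""
    width = right - left
    length = front - back
    # Non-uniform scale, as in the compute shader (scale assumed to be 1).
    x *= width / dimension
    z *= length / dimension
    # Hypothetical translation: move the centred field onto the room's centre.
    x += (left + right) * 0.5
    z += (back + front) * 0.5
    return x, z


# 10 m field into a hypothetical room spanning x in [-2, 2] and z in [-1, 5].
print(fit_to_room(-5.0, -5.0, 10.0, -2.0, 2.0, -1.0, 5.0))  # → (-2.0, -1.0)
print(fit_to_room(5.0, 5.0, 10.0, -2.0, 2.0, -1.0, 5.0))    # → (2.0, 5.0)
```

The corners of the field land exactly on the corners of the room's bounding box. The X and Z axes are stretched by different amounts, so a long, thin room gets correspondingly stretched grass spacing.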

A world UV for each blade of grass is generated at this point, too. In the original implementation it is used to sample the wind texture and a heightmap. We don't need a heightmap, and the artifacts that the room scaling introduces into the wind texture aren't perceptible. However, we would need the UV to interact with the grass later, so I had to transform the generated UV to match the room as well.

```hlsl
float uvX = pos.x;
float uvY = pos.z;

// Scale UV to room
uvX *= dimension / width;
uvY *= dimension / length;

// Apply translation after scaling
float offset = dimension * 0.5f * _NumChunks * (1.0f / _NumChunks);
uvX += offset;
uvY += offset;

float2 uv = float2(uvX, uvY) / dimension;
```
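Running the UV step on the room's extremes shows what it achieves. After the room scale is undone, every blade's UV lands in [0, 1] regardless of the room's aspect, so one texture can cover the whole floor. This Python mirror assumes scale is 1 and that pos has been scaled but not translated, as in the position snippet. Note also that dimension * 0.5f * _NumChunks * (1.0f / _NumChunks) simplifies to dimension * 0.5f.

```python
from typing import Tuple


def grass_uv(pos_x: float, pos_z: float, dimension: float,
             width: float, length: float) -> Tuple[float, float]:
    """Mirror of the shader's UV step for a position already scaled to the room."""
    # Undo the non-uniform room scale so the UV covers the whole texture.
    u = pos_x * dimension / width
    v = pos_z * dimension / length
    # The _NumChunks factors cancel, leaving half the field size.
    offset = dimension * 0.5
    return (u + offset) / dimension, (v + offset) / dimension


# Hypothetical 4 m x 6 m room, 10 m field: the room's corners and centre.
print(grass_uv(-2.0, -3.0, 10.0, 4.0, 6.0))  # → (0.0, 0.0)
print(grass_uv(0.0, 0.0, 10.0, 4.0, 6.0))    # → (0.5, 0.5)
print(grass_uv(2.0, 3.0, 10.0, 4.0, 6.0))    # → (1.0, 1.0)
```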

With this I was able to fit the grass to the bounds of the room. It is not an ideal solution, because the same number of blades is always scaled to fit the room, so smaller rooms end up with denser grass. However, most rooms are about the same order of magnitude in area, so this was good enough for our prototype.
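The blade count is fixed, so the density multiplier is just the ratio of the original footprint's area to the room's area. The room sizes below are hypothetical examples.

```python
def density_multiplier(field_size: float, room_width: float, room_length: float) -> float:
    """How many times denser the grass gets when a square field is squashed into the room."""
    return (field_size * field_size) / (room_width * room_length)


print(density_multiplier(10.0, 4.0, 5.0))    # → 5.0
print(density_multiplier(10.0, 10.0, 10.0))  # → 1.0
```

A 4x5 m room gets five times as many blades per square metre as a room that fills the whole 10x10 m footprint.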

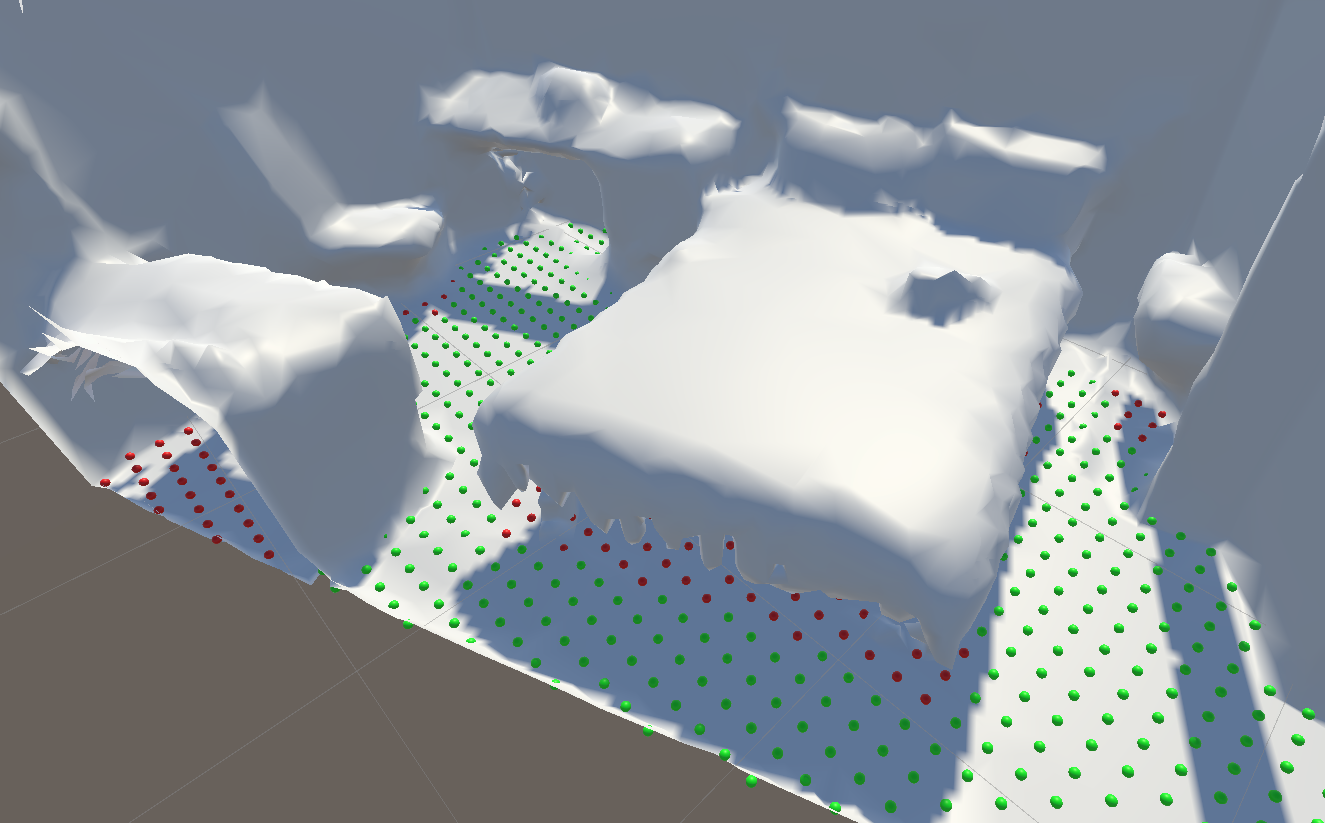

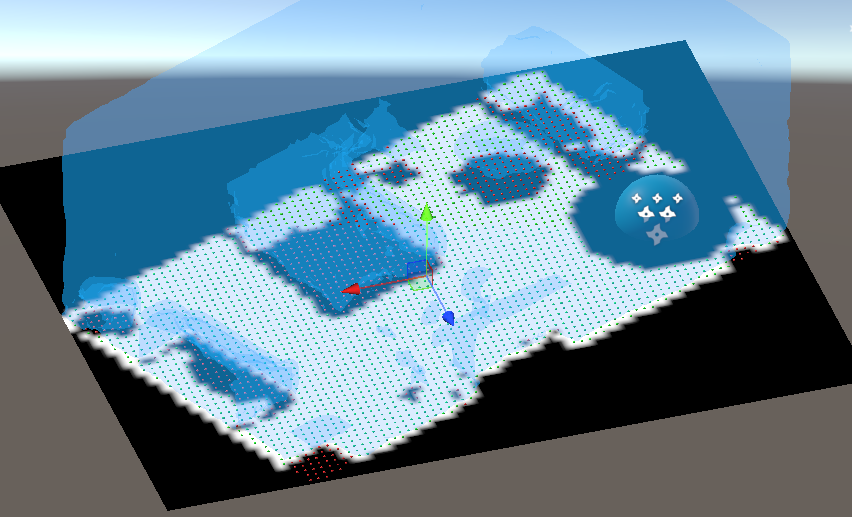

The next problem to solve was interaction: our mechanic involved cutting the grass. The blades were not GameObjects, so there was no object to destroy and no transform to compare against. We needed another way to relate a position in the room to something the grass could understand. Moritz had created an array of points covering the floor, taking raised parts of the scene mesh into account to determine where grass ought to be.

We opted to use a render texture to communicate this information to the grass' vertex shader. This approach meant we could write in information about the pre-existing furniture at startup, and use the same technique to update the grass at runtime. UVs are generated for the points and used to write into an initially black render texture. Green points, which mark where grass should be on startup, write a white pixel into the render texture. Everywhere else stays black, which means the grass is cut at that location. When points are collected, they write black into the texture, cutting the grass there.
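The render texture logic can be modelled with a plain 2D array. Everything here is illustrative: the 4x4 resolution, the nearest-texel UV mapping and the helper names are my own. In the project, the writes go into a RenderTexture on the GPU rather than into a Python list.

```python
from typing import List, Tuple

Mask = List[List[float]]


def make_mask(size: int) -> Mask:
    """An all-black (0.0) square mask standing in for the render texture."""
    return [[0.0] * size for _ in range(size)]


def uv_to_texel(u: float, v: float, size: int) -> Tuple[int, int]:
    """Nearest texel for a UV, clamped to the texture edges."""
    x = max(0, min(int(u * size), size - 1))
    y = max(0, min(int(v * size), size - 1))
    return x, y


def write_point(mask: Mask, u: float, v: float, value: float) -> None:
    """Write white (1.0) for grass or black (0.0) for cut grass at a point's UV."""
    x, y = uv_to_texel(u, v, len(mask))
    mask[y][x] = value


mask = make_mask(4)
# Startup: hypothetical "green" points that should have grass write white.
for u, v in [(0.1, 0.1), (0.6, 0.1)]:
    write_point(mask, u, v, 1.0)
# Runtime: collecting a point writes black, cutting the grass there.
write_point(mask, 0.6, 0.1, 0.0)
print(mask[0])  # → [1.0, 0.0, 0.0, 0.0]
```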

The last step was to sample the render texture and use it to remove grass at a particular location. We can sample using the UV which was scaled to the room during position generation. Then we clip the grass based on the read value.

```hlsl
// Sample the texture in the vertex stage to reduce texture lookups
o.grassMap = tex2Dlod(_GrassMap, worldUV);

// ...

// Clip pixels in the fragment stage if the read value is less than white
clip(i.grassMap.x - .99);
```
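Every vertex of a blade shares the blade's UV, so the sampled value is constant across the blade and the clip keeps or removes the whole blade at once. The sketch below models that per-blade decision on the CPU. It assumes nearest-texel sampling on a tiny hand-made mask, since the sampler settings aren't shown here. In HLSL, clip() discards the current pixel when its argument is less than zero.

```python
from typing import List

Mask = List[List[float]]


def sample_nearest(mask: Mask, u: float, v: float) -> float:
    """Nearest-texel lookup, clamped to the texture edges."""
    size = len(mask)
    x = max(0, min(int(u * size), size - 1))
    y = max(0, min(int(v * size), size - 1))
    return mask[y][x]


def survives_clip(value: float, threshold: float = 0.99) -> bool:
    """clip(value - threshold) discards the fragment when its argument is negative."""
    return value - threshold >= 0.0


# A hypothetical 2x2 mask: white, black, a half-grey edge texel, white.
mask = [[1.0, 0.0],
        [0.5, 1.0]]
for u, v in [(0.2, 0.2), (0.7, 0.2), (0.2, 0.7)]:
    print((u, v), survives_clip(sample_nearest(mask, u, v)))
```

With a threshold this close to 1, any texel that isn't fully white removes the blade. That includes a filtered value at the edge of a cut patch, so partially cut areas err on the side of being cut.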

That's it for the main moving parts we implemented on top of Acerola's grass for the hackathon. Thanks for reading, and please get in touch if you have any questions!